Qianzhong Chen’s Website

I’m a first-year PhD student in the Stanford Aero-Astro Department, advised by Dr. Mac Schwager. Before that, I was a research assistant at UIUC-ACRL, advised by Dr. Naira Hovakimyan and Dr. Sheng Cheng.

I also spent time at xdof.ai and Unitree as a robotics research intern. I was fortunate to be advised by Philipp Wu (xdof.ai) and Fred Shentu (xdof.ai).

My ultimate goal is to build a general-purpose robot that can carry out complex manipulation tasks for people in both homes and factories. My current research interests include robot VLA models, world models, robot policy reward modeling, and reinforcement learning. Previously, I also did research on end-to-end drone navigation, drone VLA, legged robot locomotion, and differentiable simulation.

I received my Bachelor’s degree in Mechanical Engineering from both Zhejiang University and UIUC in 2023. I received my Master’s degree in Mechanical Engineering from Stanford University in 2025.

My resume can be found here (updated Dec. 2025).

Feel free to contact me via email (qchen23 {at} stanford.edu), LinkedIn, or WeChat: CQZ_David.

Selected Publications (Full list)

Q. Chen, J. Yu, M. Schwager, P. Abbeel, F. Shentu, P. Wu

arXiv | website | LeRobot | code

TL;DR: SARM is a stage-aware, video-based reward modeling framework that enables scalable and robust imitation learning for long-horizon tasks by deriving progress signals from natural language annotations, dramatically improving policy performance over standard behavior cloning.

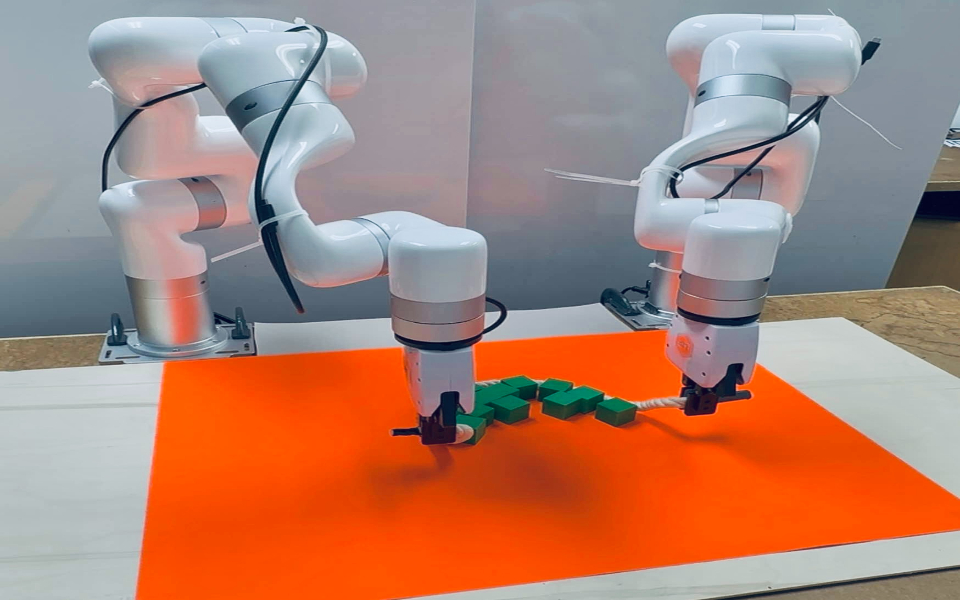

S. Huang, Q. Chen, X. Zhang, J. Sun, M. Schwager

arXiv | website | code

TL;DR: A state-of-the-art 3D world model trained directly from point clouds, which enables accurate dynamics prediction across multi-object, multi-material scenarios and empowers model-based visuomotor control in robotic manipulation tasks.

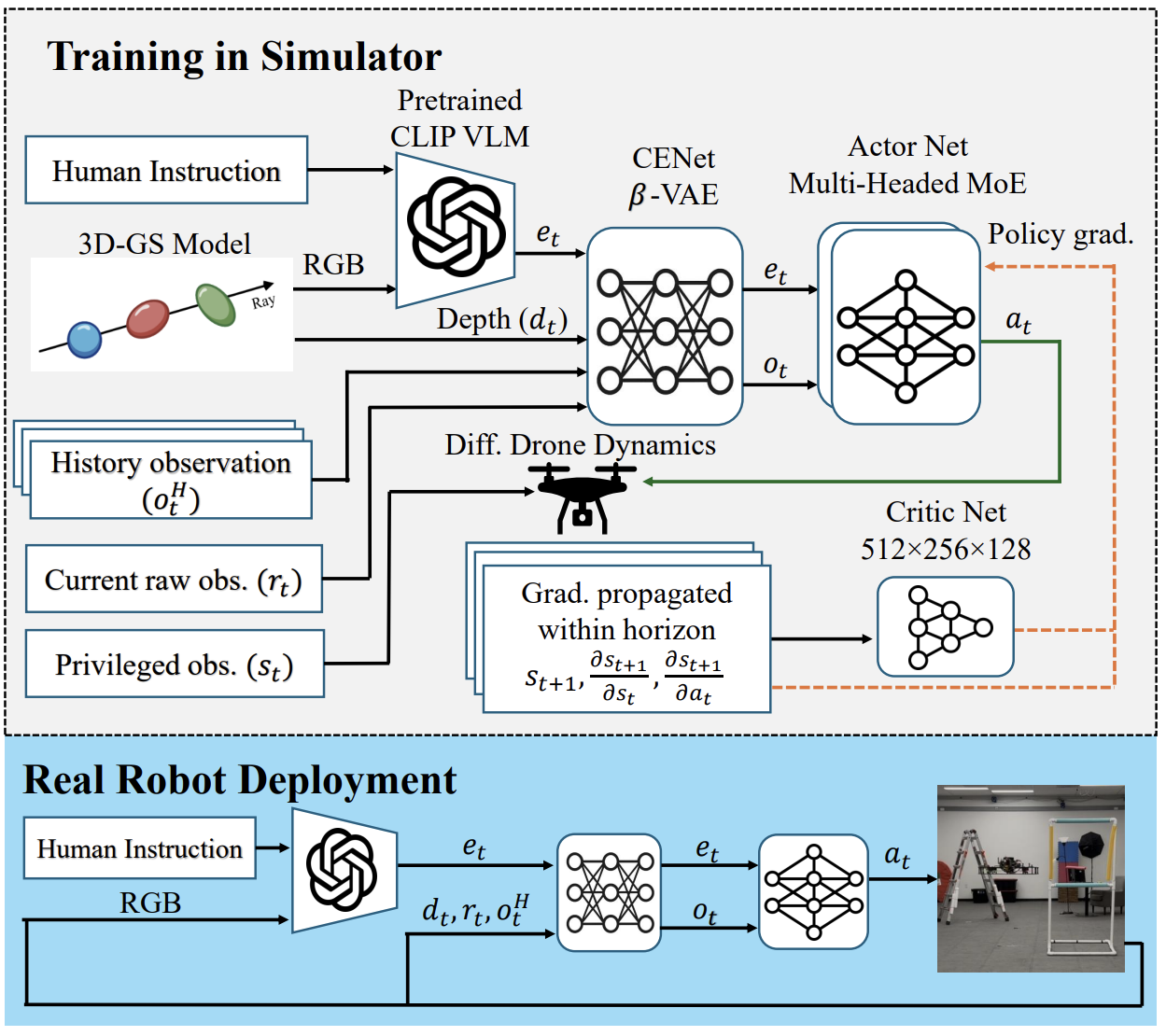

Q. Chen, N. Gao, S. Huang, J. Low, T. Chen, J. Sun, M. Schwager

arXiv | website | code

TL;DR: GRaD-Nav++ is a lightweight, fully onboard Vision-Language-Action framework that enables drones to follow natural language commands in real time using DiffRL training in a 3DGS simulator, achieving strong generalization across tasks and environments both in simulation and on real hardware.

Recent news

- 2026/01: 🎉🎉 Our new paper SARM on Robot Manipulation Reward Modeling has been accepted to ICLR 2026!

- 2026/01: 🚀🚀 SARM is now natively supported in LeRobot! Thanks, Hugging Face 🤗!

- 2025/11: 🎉🎉 Our new paper GRaD-Nav++ on drone VLA has been accepted to RA-L 2025!

- 2025/08: 🎉🎉 Our new paper ARCH on RL for manipulation has been accepted to CoRL 2025!

- 2025/08: 🎉🎉 Our new paper ParticleFormer on 3D world models for manipulation has been accepted to CoRL 2025!

- 2025/04: ✨✨ I was admitted to the Aeronautics and Astronautics Department, Stanford University as a PhD student, supervised by Dr. Mac Schwager.

Honors and awards

- Stanford Aero-Astro PhD Fellowship (2025)

- Outstanding Undergraduate Thesis Award, Department of Mechanical Engineering, Zhejiang University (2023)

- First Class Academic Scholarship of ZJU-UIUC Institute (Top 1%) (2022)

Service

- Journal Reviewer: IEEE RA-L (2025), IEEE IoT (2025), IEEE TIE (2025)

- Conference Reviewer: IROS (2025), ICRA (2026)

- Member of the IEEE Robotics and Automation Society